Yes, finally the first real post on my blog :) This article summarizes NIC teaming and VLAN tagging support on Hyper-V. The scenarios were tested on HP Blade systems with the HP NCU utility. Windows Server 2008 Datacenter Edition (Server Core installation) was used for the parent partition.

To check VLAN tagging with teaming, two scenarios were tested:

1. NIC Teaming and Tagging with HP NCU (OK)

2. NIC Teaming with NCU and Tagging at Hyper-V Level (NOK)

As noted above, only the first scenario works. This scenario creates a lot of adapter overhead at the OS level. For instance, assume you have 2 physical interfaces that are teamed and you create 4 VLANs on top. After the necessary configuration you have:

2 interfaces for the actual pNICs

1 interface for the teamed NIC

4 interfaces for the VLANs

4 interfaces for the virtual switches

That is 11 interfaces in total, which adds management overhead, but this is currently the only scenario supported by Hyper-V.
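The count is simple arithmetic: pNICs + 1 team + one interface per VLAN + one interface per virtual switch. A minimal sketch of it, assuming a single NCU team over all physical NICs and exactly one external virtual switch per VLAN (the function name is hypothetical):

```python
def parent_partition_interfaces(pnics: int, vlans: int) -> int:
    """Count the network interfaces visible in the parent partition.

    Assumes one NCU team built from all physical NICs, one NCU VLAN
    interface per VLAN, and one external virtual switch per VLAN
    interface (each switch adds a virtual network adapter to the host).
    """
    physical = pnics        # one adapter per physical NIC
    team = 1                # the teamed NIC created by NCU
    vlan_ifaces = vlans     # one NCU VLAN interface per VLAN
    vswitch_ifaces = vlans  # one parent-partition adapter per virtual switch
    return physical + team + vlan_ifaces + vswitch_ifaces


if __name__ == "__main__":
    print(parent_partition_interfaces(2, 4))   # the example above -> 11
    print(parent_partition_interfaces(2, 10))  # ten VLANs -> 23
```

Under these assumptions every extra VLAN adds two interfaces to the parent partition, which is where the management overhead comes from.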

Also, with this setup the parent partition always has L2 access to all VLANs, because the parent partition's virtual network adapter is connected to the virtual switch by default. To create an external network without the parent partition attached, you can use the PowerShell scripts mentioned on the pages below.

Alternatively, after creating a virtual network you can disable this virtual interface. On Server Core:

netsh interface show interface

netsh interface set interface name="Name of Interface" admin=disabled
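With many VLANs it can help to script this step. The Python sketch below parses the output of "netsh interface show interface" and builds one disable command per matching interface. It assumes the four-column layout (Admin State, State, Type, Interface Name) and uses the explicit admin=disabled keyword; the sample output and the interface names in it (such as "VLAN1101 vSwitch") are hypothetical, so adapt the pattern to your own naming.

```python
import re

# Hypothetical output of "netsh interface show interface"; real interface
# names depend on how the adapters were named on your host.
SAMPLE_OUTPUT = """
Admin State    State          Type             Interface Name
-------------------------------------------------------------------------
Enabled        Connected      Dedicated        Local Area Connection
Enabled        Connected      Dedicated        VLAN1101 vSwitch
Disabled       Disconnected   Dedicated        VLAN1102 vSwitch
"""


def parse_show_interface(output: str) -> list:
    """Return (admin_state, state, type, name) tuples, skipping header lines."""
    rows = []
    for line in output.splitlines():
        parts = line.split(None, 3)  # the name (last column) may contain spaces
        if len(parts) != 4 or parts[0] not in ("Enabled", "Disabled"):
            continue  # blank line, column header or separator
        admin, state, if_type, name = parts
        rows.append((admin, state, if_type, name.strip()))
    return rows


def disable_commands(output: str, name_pattern: str) -> list:
    """Build netsh commands that disable enabled interfaces matching name_pattern."""
    regex = re.compile(name_pattern)
    return [
        f'netsh interface set interface name="{name}" admin=disabled'
        for admin, _state, _type, name in parse_show_interface(output)
        if admin == "Enabled" and regex.search(name)
    ]


if __name__ == "__main__":
    for cmd in disable_commands(SAMPLE_OUTPUT, r"vSwitch$"):
        print(cmd)
```

Review the printed commands before running them at the Server Core prompt, and make sure the interface used for host management stays enabled.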

To understand the networking logic in Hyper-V, I strongly recommend reading the document below:

http://www.microsoft.com/DOWNLOADS/details.aspx?FamilyID=3fac6d40-d6b5-4658-bc54-62b925ed7eea&displaylang=en

As shown in the diagram above, when you bind a virtual network to a physical interface, a virtual network adapter is created at the OS level. This virtual adapter gets all the network bindings, such as TCP/IP. After this operation, the existing network adapter for the pNIC has only a binding for the Hyper-V Virtual Switch protocol.
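To make the binding change concrete, here is a conceptual Python model (not a Windows API) of what happens when an external virtual network is bound to an adapter: every protocol except the virtual switch protocol moves from the original adapter to a new parent-partition virtual adapter. Adapter and protocol names are illustrative assumptions.

```python
SWITCH_PROTOCOL = "Hyper-V Virtual Switch protocol"


def bind_external_network(bindings: dict, adapter: str) -> dict:
    """Return adapter->protocols bindings after binding a virtual network to adapter.

    Conceptual model only; the input dictionary is left unchanged.
    """
    result = {name: set(protocols) for name, protocols in bindings.items()}
    moved = result[adapter] - {SWITCH_PROTOCOL}  # TCP/IP etc. leave the adapter
    result[adapter] = {SWITCH_PROTOCOL}          # only the switch protocol stays bound
    result[f"Virtual Network Adapter ({adapter})"] = moved  # new host-side adapter
    return result


if __name__ == "__main__":
    before = {"VLAN1101": {"TCP/IPv4", "TCP/IPv6", "Client for Microsoft Networks"}}
    after = bind_external_network(before, "VLAN1101")
    for name, protocols in sorted(after.items()):
        print(name, "->", sorted(protocols))
```

This is why IP settings configured on the physical (or NCU VLAN) adapter before creating the virtual network end up on the new virtual adapter instead.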

For OS-level applications to work over the newly created virtual adapter, make sure the appropriate VLAN tagging is also configured at the host level.

IMPORTANT NOTE: Make sure you don't create any virtual switch on the pNIC that is used for communication between SCVMM and the Hyper-V host. Leave at least one NIC or teamed interface for this communication.
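This rule is easy to check while planning, before touching the host. A minimal sketch, assuming the plan is written down as a mapping from virtual switch name to the adapter it will be bound to; all switch and adapter names are hypothetical:

```python
def check_management_nic(plan: dict, management_nic: str) -> list:
    """Return problems in a {virtual switch: bound adapter} plan.

    Flags any virtual switch bound to the adapter (pNIC or team) that
    SCVMM uses to reach the Hyper-V host.
    """
    return [
        f"virtual switch '{switch}' is bound to management adapter '{adapter}'"
        for switch, adapter in sorted(plan.items())
        if adapter == management_nic
    ]


if __name__ == "__main__":
    plan = {
        "vSwitch-1101": "VLAN1101",
        "vSwitch-1102": "VLAN1102",
        "vSwitch-Mgmt": "Team-Mgmt",  # mistake: this is the SCVMM adapter
    }
    for problem in check_management_nic(plan, "Team-Mgmt"):
        print(problem)
```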

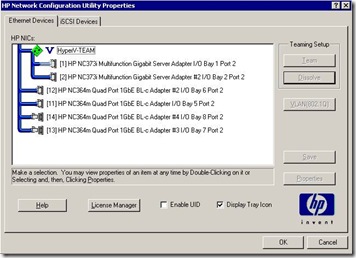

NIC Teaming and Tagging with HP NCU

Unlike VMware ESX/ESXi, Hyper-V has NO teaming capability at the hypervisor level, as mentioned in KB968703 (http://support.microsoft.com/kb/968703):

Since Network Adapter Teaming is only provided by Hardware Vendors, Microsoft does not provide any support for this technology thru Microsoft Product Support Services. As a result, Microsoft may ask that you temporarily disable or remove Network Adapter Teaming software when troubleshooting issues where the teaming software is suspect.

If the problem is resolved by the removal of Network Adapter Teaming software, then further assistance must be obtained thru the Hardware Vendor.

Teaming support therefore has to come from the hardware vendor. On HP hardware we used HP NCU for teaming.

IMPORTANT NOTE: HP NCU has to be installed AFTER enabling the Hyper-V role.

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c01663264/c01663264.pdf

http://support.microsoft.com/kb/950792

OS Level Settings For Teaming+Tagging

To test Hyper-V with teaming + tagging:

2. Hyper-V role activated with the necessary KB updates:

http://support.microsoft.com/?kbid=950050

http://support.microsoft.com/?kbid=956589

http://support.microsoft.com/?kbid=956774

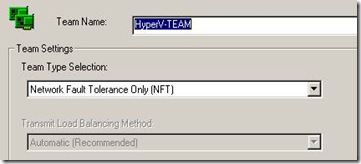

3. NFT (Network Fault Tolerance) teaming was configured with the HpTeam utility.

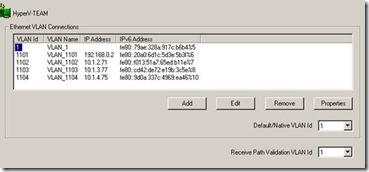

4. NCU installed together with the Broadcom and Intel drivers.

5. VLANs 1, 1101, 1102, 1103 and 1104 were set up on the teamed interface.
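For reference, tagging here means IEEE 802.1Q: for each tagged VLAN, a 4-byte tag (TPID 0x8100 followed by 3 priority bits, 1 DEI bit and a 12-bit VLAN ID) is inserted into the Ethernet frame, and VLAN IDs 0 and 4095 are reserved. The Python sketch below builds that tag for the VLAN IDs used in this setup; it only illustrates the frame format and is not how NCU is configured.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def vlan_tag(vlan_id: int, priority: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte IEEE 802.1Q tag for vlan_id."""
    if not 1 <= vlan_id <= 4094:  # 0 and 4095 are reserved
        raise ValueError(f"invalid VLAN ID {vlan_id}")
    if not 0 <= priority <= 7 or dei not in (0, 1):
        raise ValueError("priority must be 0-7 and DEI 0 or 1")
    tci = (priority << 13) | (dei << 12) | vlan_id  # 3 + 1 + 12 bits
    return struct.pack("!HH", TPID_8021Q, tci)     # network byte order


if __name__ == "__main__":
    for vid in (1, 1101, 1102, 1103, 1104):
        print(vid, vlan_tag(vid).hex())
```

For example, VLAN 1101 (0x44D) yields the tag 81 00 04 4d.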

Hyper-V Level Settings For Teaming+Tagging

NOTE: The "Access host through VLAN" option lets the parent partition talk on that VLAN.

2. Two VMs were created on the host for testing. Each VM was connected to a different virtual switch, as below.

3. After setting tagging at both host and VM level, ping between different VLANs works. (The switch was configured for inter-VLAN routing.)

NIC Teaming with NCU and Tagging at Hyper-V Level

2. Hyper-V role activated with the necessary KB updates:

http://support.microsoft.com/?kbid=950050

http://support.microsoft.com/?kbid=956589

http://support.microsoft.com/?kbid=956774

3. NCU installed together with the Broadcom and Intel drivers.

4. Only teaming was configured with NCU.

5. A virtual switch was created at the Hyper-V level and the necessary tagging was set on the host's virtual adapter.

6. The guest VMs were also configured with tagged vNICs.

7. Network connectivity between the VMs does NOT work.

Hi, have you tried a manual failover with VM teaming?

When I ping my VM and trigger a failover on the NFT team, I lose ping for 5 minutes. 5 minutes is the time my physical switch takes to rebuild its MAC address table.

If I start a ping from the VM to another computer on my network, the MAC table is updated immediately and I can ping this VM again.

The problem seems to be that NCU doesn't send any information to Hyper-V about the change when a failover occurs...

Schmurtz,

We ran many failover tests in our environment without any issues.

Can you briefly describe your environment? Are your servers directly connected to the uplink switches, or is there an interconnect in between? Are you using the latest drivers for your NIC chipset?